Tag: AI

Microsoft AI Skills Fest

The Microsoft AI Skills Fest is a 50-day learning event, running from April 8 until May 28, 2025. It’s all about leveling up AI skills with tailored content for tech pros, business managers, students, and public sector workers.

- AI Agents for Tech Pros – Dive into Azure AI Foundry & GitHub Copilot to create and fine-tune AI-driven tools.

- AI for Business Managers – Learn practical AI strategies that boost workplace efficiency. Leads to a pro certificate.

- Students & AI Literacy – Minecraft Education’s Fantastic Fairgrounds introduces AI concepts in an engaging way.

- AI in the Public Sector – Explore responsible AI, security, and decision-making tools to improve government services.

- Skill Challenges – Compete with global learners in AI challenges.

- Certification Discounts – Massive exam discounts & a chance to grab one of 50,000 free vouchers.

- Training Sessions – Exclusive workshops from Microsoft Training Services Partners.

- Festival Guide – A map to explore AI zones and experiences.

It’s worth checking out. Head over to https://aiskillsfest.event.microsoft.com/ and register.

Enjoy!

Festive Tech Calendar 2024 YouTube playlist

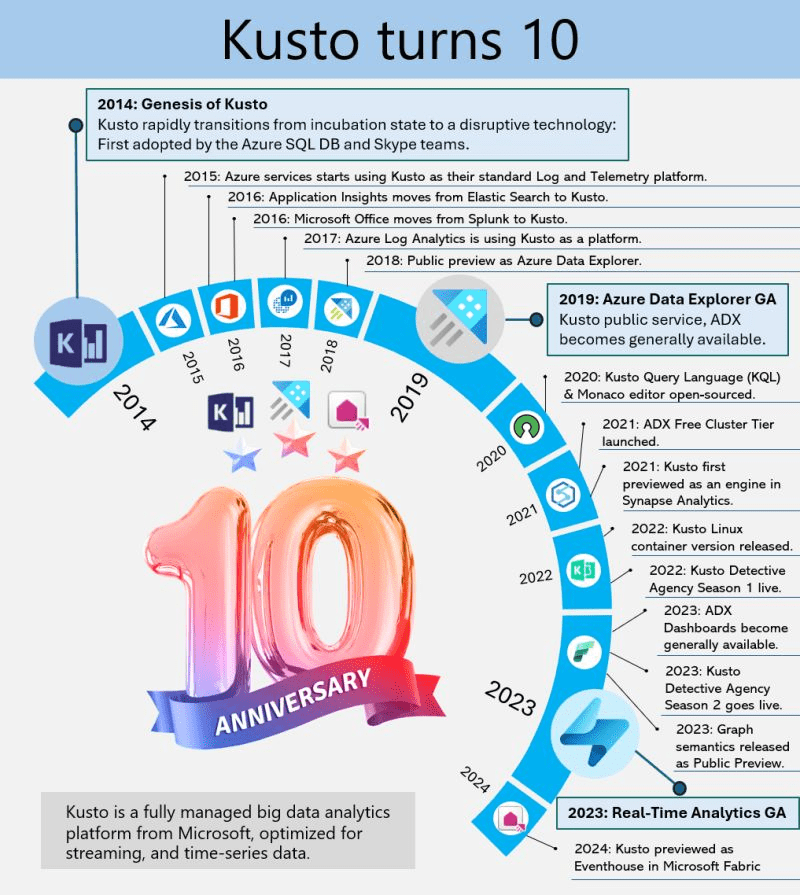

Kusto’s 10-Year Evolution at Microsoft

Kusto, the internal service driving Microsoft’s telemetry and several key services, recently marked its 10-year milestone. Over the decade, Kusto has evolved significantly, becoming the backbone for crucial applications such as Sentinel, Application Insights, Azure Data Explorer, and more recently, Eventhouse in Microsoft Fabric. This journey highlights its pivotal role in enhancing data processing, monitoring, and analytics across Microsoft’s ecosystem.

This powerful service has continually adapted to meet the growing demands of Microsoft’s internal and external data needs, underscoring its importance in the company’s broader strategy for data management and analysis.

A Dive into Azure Data Explorer’s Origins

Azure Data Explorer (ADX), initially code-named “Kusto,” has a fascinating backstory. In 2014, it began as a grassroots initiative at Microsoft’s Israel R&D center. The team wanted a name that resonated with their mission of exploring vast data oceans, drawing inspiration from oceanographer Jacques Cousteau. Kusto was designed to tackle the challenges of rapid and scalable log and telemetry analytics, much like Cousteau’s deep-sea explorations.

By 2018, ADX was officially unveiled at the Microsoft Ignite conference, evolving into a fully managed big data analytics platform. It efficiently handles structured, semi-structured (like JSON), and unstructured data (like free text). With its powerful querying capabilities and minimal latency, ADX allows users to swiftly explore and analyze data. True to its oceanic roots, the Kusto name remains a tribute to the spirit of discovery.

Enjoy!


Microsoft Build 2024 Book of News

What is the Book of News? The Microsoft Build 2024 Book of News is your guide to the key news items announced at Build 2024.

As expected, there is a lot of focus on Azure and AI, followed by Microsoft 365, Security, Windows, and Edge & Bing. This year the Book of News is interactive instead of being a PDF.

Some of my favourite announcements

Azure Cloud Native and Application Platform

Azure Functions

Microsoft Azure Functions is launching several new features to provide more flexibility and extensibility to customers in this era of AI.

Features now in preview include:

- A Flex Consumption plan that will give customers more flexibility and customization without compromising on available features to run serverless apps.

- Extension for Microsoft Azure OpenAI Service that will enable customers to easily infuse AI in their apps. Customers will be able to use this extension to build new AI-led apps like retrieval-augmented generation, text completion and chat assistant.

- Visual Studio Code for the Web will provide a browser-based developer experience to make it easier to get started with Azure Functions. This feature is available for Python, Node and PowerShell apps in the Flex Consumption hosting plan.

Features now generally available include:

- Azure Functions on Azure Container Apps lets developers use the Azure Container Apps environment to deploy multitype services to a cloud-native solution designed for centralized management and serverless scale.

- Dapr extension for Azure Functions enables developers to use Dapr’s powerful cloud native building block APIs and a large array of ecosystem components in the native and friendly Azure Functions triggers and bindings programming model. The extension is available to run on Azure Kubernetes Service and Azure Container Apps.

Azure Container Apps

Microsoft Azure Container Apps will include dynamic sessions, in preview, for AI app developers to instantly run large language model (LLM)-generated code or extend/customize software as a service (SaaS) apps in an on-demand, secure sandbox.

Customers will be able to mitigate risks to their security posture, leverage serverless scale for their apps and save months of development work, ongoing configurations and management of compute resources that reduce their cost overhead. Dynamic sessions will provide a fast, sandboxed, ephemeral compute suitable for running untrusted code at scale.

Additional new features, now in preview, include:

- Support for Java: Java developers will be able to monitor the performance and health of apps with Java metrics such as garbage collection and memory usage.

- Microsoft .NET Aspire dashboard: With dashboard support for .NET Aspire in Azure Container Apps, developers will be able to access live data about projects and containers in the cloud to evaluate the performance of .NET cloud-native apps and debug errors.

Azure App Service

Microsoft Azure App Service is a cloud platform to quickly build, deploy and run web apps, APIs and other components. These capabilities are now in preview:

- Sidecar patterns are a way to add extra features to the main app, such as logging, monitoring and caching, without changing the app code. Users will be able to run these features alongside the app, and the pattern is supported for both source code and container-based deployments.

- WebJobs will be integrated with Azure App Service, which means they will share the same compute resources as the web app to help save costs and ensure consistent performance. WebJobs are background tasks that run on the same server as the web app and can perform various functions, such as sending emails, executing bash scripts and running scheduled jobs.

- GitHub Copilot skills for Azure Migrate will enable users to ask questions like, “Can I migrate this app to Azure?” or “What changes do I need to make to this code?” to get answers and recommendations from Azure Migrate. GitHub Copilot licenses are sold separately.

These capabilities are now generally available:

- Automatic scaling continuously adjusts the number of servers that run apps based on a combination of demand and server utilization, without any code or complex scaling configurations. This helps users handle dynamically changing site traffic without over-provisioning or under-provisioning the app’s server resources.

- Availability zones are isolated locations within an Azure region that provide high availability and fault tolerance. Enabling availability zones lets users take advantage of the increased service level agreement (SLA) of 99.99%. For more information, reference the SLA for App Service.

- TLS 1.3 encryption, the latest version of the protocol that secures communication between apps and the clients, offers faster and more secure connections, as well as better compatibility with modern browsers and devices.

Azure Static Web Apps

To help customers deliver more advanced capabilities, Microsoft Azure Static Web Apps will offer a dedicated pricing plan, now in preview, that supports enterprise-grade features for enhanced networking and data storage. The dedicated plan for Azure Static Web Apps will utilize dedicated compute capacity and will enable:

- Network isolation to enhance security.

- Data residency to help customers comply with data management policies and requirements.

- Enhanced quotas to allow for more custom domains within an app service plan.

- “Always-on” functionality for Azure Static Web Apps managed functions, which provide built-in API endpoints to connect to backend services.

Azure Logic Apps

Microsoft Azure Logic Apps is a cloud platform where users can create and run automated workflows with little to no code. Updates to the platform include:

An enhanced developer experience:

- Improved onboarding experience in Microsoft Visual Studio Code: A simplified extension installation experience and improvements on project start and debugging are now generally available.

- Logic Apps Standard deployment scripting tools in Visual Studio Code: This feature will simplify the process of setting up a continuous integration/continuous delivery (CI/CD) process for Logic Apps Standard by providing support in the tooling to generalize common metadata files and automate the creation of infrastructure scripts to streamline the task of preparing code for automated deployments. This feature is in preview.

- Support for Zero Downtime deployment scenarios: This will enable Zero Downtime deployment scenarios for Logic Apps Standard by providing support for deployment slots in the portal. This update is in preview.

Expanded functionality and compatibility with Logic Apps Standard:

- .NET Custom Code Support: Users will be able to extend low-code workflows with the power of .NET 8 by authoring a custom function and calling from a built-in action within the workflow. This feature is in preview.

- Logic Apps connectors for IBM mainframe and midranges: These connectors allow customers to preserve the value of their workloads running on mainframes and midranges by allowing them to extend to the Azure Cloud without investing more resources in the mainframe or midrange environments using Azure Logic Apps. This update is generally available.

- Other updates, in preview, include Azure Integration account enhancements and Logic Apps monitoring dashboard.

Azure API Center

Microsoft Azure API Center, now generally available, provides a centralized solution to manage the challenges of API sprawl, which is exacerbated by the rapid proliferation of APIs and AI solutions. The Azure API Center offers a unified inventory for seamless discovery, consumption and governance of APIs, regardless of their type, lifecycle stage or deployment location. This enables organizations to maintain a complete and current API inventory, streamline governance and accelerate consumption by simplifying discovery.

Azure API Management

Azure API Management has introduced new capabilities to enhance the scalability and security of generative AI deployments. These include the Microsoft Azure OpenAI Service token limit policy for fair usage and optimized resource allocation, one-click import of Azure OpenAI Service endpoints as APIs, a Load Balancer for efficient traffic distribution and a Circuit breaker to protect backend services.

Other updates, now generally available, include first-class support for OData API type, allowing easier publication and security of OData APIs, and full support for gRPC API type in self-hosted gateways, facilitating the management of gRPC services as APIs.

Azure Event Grid

Microsoft Azure Event Grid has new features that are tailored to customers who are looking for a pub-sub message broker that can enable Internet of Things (IoT) solutions using MQTT protocol and can help build event-driven apps. These capabilities enhance Event Grid’s MQTT broker capability, make it easier to transition to Event Grid namespaces for push and pull delivery of messages, and integrate new sources. Features now generally available include:

- Use the Last Will and Testament feature, in compliance with the MQTT v5 and MQTT v3.1.1 specifications, so apps receive notifications when clients get disconnected, enabling management of downstream tasks to prevent performance degradation.

- Create data pipelines that utilize both Event Grid Basic resources and Event Grid Namespace Topics (supported in Event Grid Standard). This means customers can utilize Event Grid namespace capabilities, such as MQTT broker, without needing to reconstruct existing workflows.

- Support new event sources, such as Microsoft Entra ID and Microsoft Outlook, leveraging Event Grid’s support for the Microsoft Graph API. This means customers can use Event Grid for new use cases, like when a new employee is hired or a new email is received, to process that information and send to other apps for more action.

Azure Data Platform

Real-Time Intelligence in Microsoft Fabric

The new Real-Time Intelligence within Microsoft Fabric will provide an end-to-end software as a service (SaaS) solution that will empower customers to act on high volume, time-sensitive and highly granular data in a proactive and timely fashion to make faster and more-informed business decisions. Real-Time Intelligence, now in preview, will empower user roles such as everyday analysts with simple low-code/no-code experiences, as well as pro developers with code-rich user interfaces.

Features of Real-Time Intelligence will include:

- Real-Time hub, a single place to ingest, process and route events in Fabric as a central point for managing events from diverse sources across the organization. All events that flow through Real-Time hub will be easily transformed and routed to any Fabric data stores.

- Event streams that will provide out-of-the-box streaming connectors to cross cloud sources and content-based routing that helps remove the complexity of ingesting streaming data from external sources.

- Eventhouse and real-time dashboards with improved data exploration to assist business users looking to gain insights from terabytes of streaming data without writing code.

- Data Activator that will integrate with the Real-Time hub, event streams, real-time dashboards and KQL query sets, to make it seamless to trigger on any patterns or changes in real-time data.

- AI-powered insights, now with an integrated Microsoft Copilot in Fabric experience for generating queries, in preview, and a one-click anomaly detection experience, allowing users to detect unknown conditions beyond human scale with high granularity in high-volume data, in private preview.

- Event-Driven Fabric will allow users to respond to system events that happen within Fabric and trigger Fabric actions, such as running data pipelines.

New capabilities and updates to Microsoft Fabric

Microsoft Fabric, the unified data platform for analytics in the era of AI, is a powerful solution designed to elevate apps, whether a user is a developer, part of an organization or an independent software vendor (ISV). Updates to Fabric include:

- Fabric Workload Development Kit: When building an app, it must be flexible, customizable and efficient. Fabric Workload Development Kit will make this possible by enabling ISVs and developers to extend apps within Fabric, creating a unified user experience. This feature is now in preview.

- Fabric Data Sharing feature: Enables real-time data sharing across users and apps. The shortcut feature API allows seamless access to data stored in external sources to perform analytics without the traditional heavy integration tax. The new Automation feature now streamlines repetitive tasks resulting in less manual work, fewer errors and more time to focus on the growth of the business. These features are now in preview.

- GraphQL API and user data functions in Fabric: GraphQL API in Fabric is a savvy personal assistant for data. It’s an API that will let developers access data from multiple sources within Fabric using a single query. User data functions will enhance data processing efficiency, enabling data-centric experiences and apps using Fabric data sources like lakehouses, data warehouses and mirrored databases using native code ability, custom logic and seamless integration. These features are now in preview.

- AI skills in Fabric: AI skills in Fabric is designed to weave generative AI into data-specific work happening in Fabric. With this feature, analysts, creators, developers and even those with minimal technical expertise will be empowered to build intuitive AI experiences with data to unlock insights. Users will be able to ask questions and receive insights as if they were asking an expert colleague while honoring user security permissions. This feature is now in preview.

- Copilot in Fabric: Microsoft is infusing Fabric with Microsoft Azure OpenAI Service at every layer to help customers unlock the full potential of their data to find insights. Customers can use conversational language to create dataflows and data pipelines, generate code and entire functions, build machine learning models or visualize results. Copilot in Fabric is generally available in Power BI and available in preview in the other Fabric workloads.

Azure Cosmos DB

Microsoft Azure Cosmos DB, the database designed for AI that allows creators to build responsive and intelligent apps with real-time data ingested and processed at any scale, has several key updates and new features that include:

- Built-in vector database capabilities: Azure Cosmos DB for NoSQL will feature built-in vector indexing and vector similarity search, enabling data and vectors to be stored together and to stay in sync. This will eliminate the need to use and maintain a separate vector database. Powered by DiskANN, available in June, Azure Cosmos DB for NoSQL will provide highly performant and highly accurate vector search at any scale. This feature is now in preview.

- Serverless to provisioned account migration: Users will be able to transition their serverless Azure Cosmos DB accounts to provisioned capacity mode. With this new feature, transition can be accomplished seamlessly through the Azure portal or Azure command-line interface (CLI). During this migration process, the account will undergo changes in-place and users will retain full access to Azure Cosmos DB containers for data read and write operations. This feature is now in preview.

- Cross-region disaster recovery: With disaster recovery in vCore-based Azure Cosmos DB for MongoDB, a cluster replica can be created in another region. This cluster replica will be continuously updated with the data written in the primary region. In the rare case of an outage in the primary region and primary cluster unavailability, this replica can be promoted to become the new read-write cluster in another region. The connection string is preserved after such a promotion, so that apps can continue to read and write to the database in another region using the same connection string. This feature is now in preview.

- Azure Cosmos DB Vercel integration: Developers building apps using Vercel can now connect easily to an existing Azure Cosmos DB database or create new Azure Try Cosmos DB accounts on the fly and integrate them to their Vercel projects. This integration improves productivity by creating apps easily with a backend database already configured. This also helps developers onboard to Azure Cosmos DB faster. This feature is now generally available.

- Go SDK for Azure Cosmos DB: The Go SDK allows customers to connect to an Azure Cosmos DB for NoSQL account and perform operations on databases, containers and items. This release brings critical Azure Cosmos DB features for multi-region support and high availability to Go, such as the ability to set preferred regions, cross-region retries and improved request diagnostics. This feature is now generally available.

Click here to read the Microsoft Build 2024 Book of News!

Enjoy!

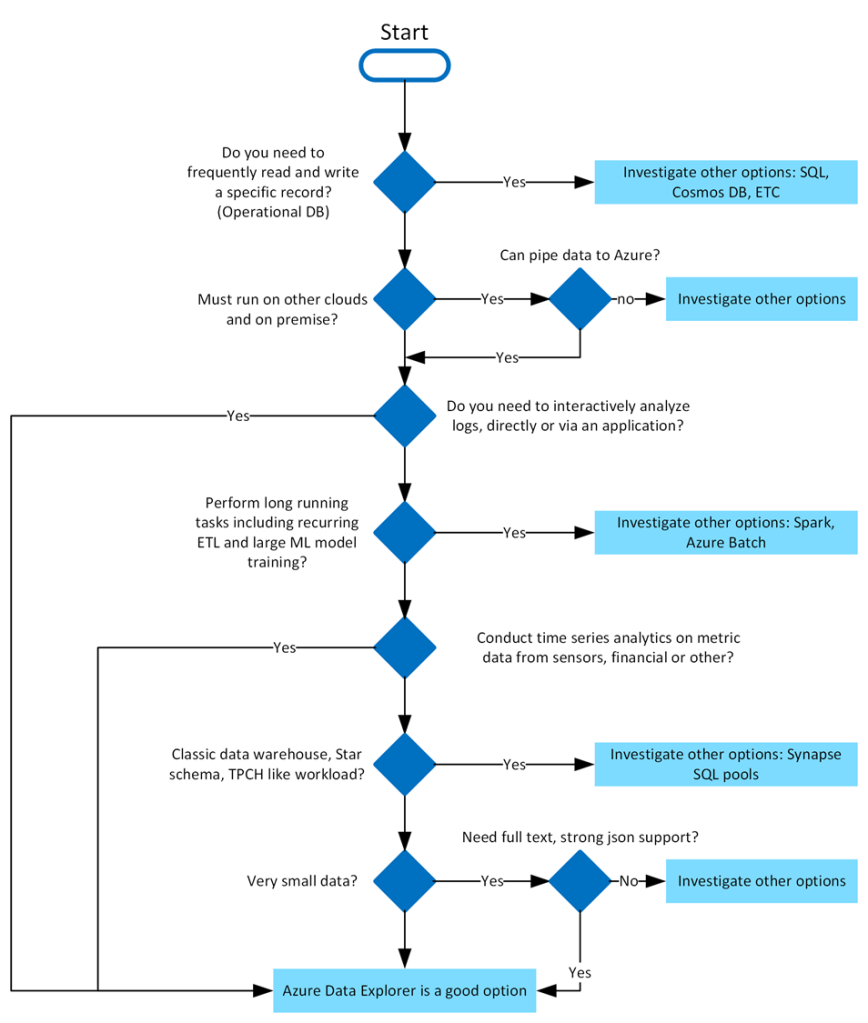

Discovering Insights with Azure Data Explorer

For the past few months, I’ve been diving into learning Azure Data Explorer (ADX) and using it for a few projects. What is Azure Data Explorer, and what would I use it for? Great questions. Azure Data Explorer is like your data’s best friend when it comes to real-time, heavy-duty analytics. It’s built to handle massive amounts of data—whether it’s structured, semi-structured, or all over the place—and turn it into actionable insights. With its star feature, the Kusto Query Language (KQL), you can dive deep into the data for tasks like spotting trends, detecting anomalies, or analyzing logs, all with ease. It’s perfect for high-speed data streams, making it a go-to for IoT and time-series data. Plus, it’s secure, scalable, and does the hard work fast so you can focus on making more intelligent decisions.

When to use Azure Data Explorer

Azure Data Explorer is ideal for enabling interactive analytics capabilities over high-velocity, diverse raw data. Use the following decision tree to help you decide if Azure Data Explorer is right for you:

What makes Azure Data Explorer unique

Azure Data Explorer stands out due to its exceptional capabilities in handling vast amounts of diverse data quickly and efficiently. It supports high-speed data ingestion (terabytes in minutes) and querying of petabytes with millisecond-level results. Its Kusto Query Language (KQL) is intuitive yet powerful, enabling advanced analytics and seamless integration with Python and T-SQL. With specialized features for time series analysis, anomaly detection, and geospatial insights, it’s tailored for deep data exploration. The platform simplifies data ingestion with its user-friendly wizard, while built-in visualization tools and integrations with Power BI, Grafana, Tableau, and more make insights accessible. It also automates data ingestion, transformation, and export, ensuring a smooth, end-to-end analytics experience.

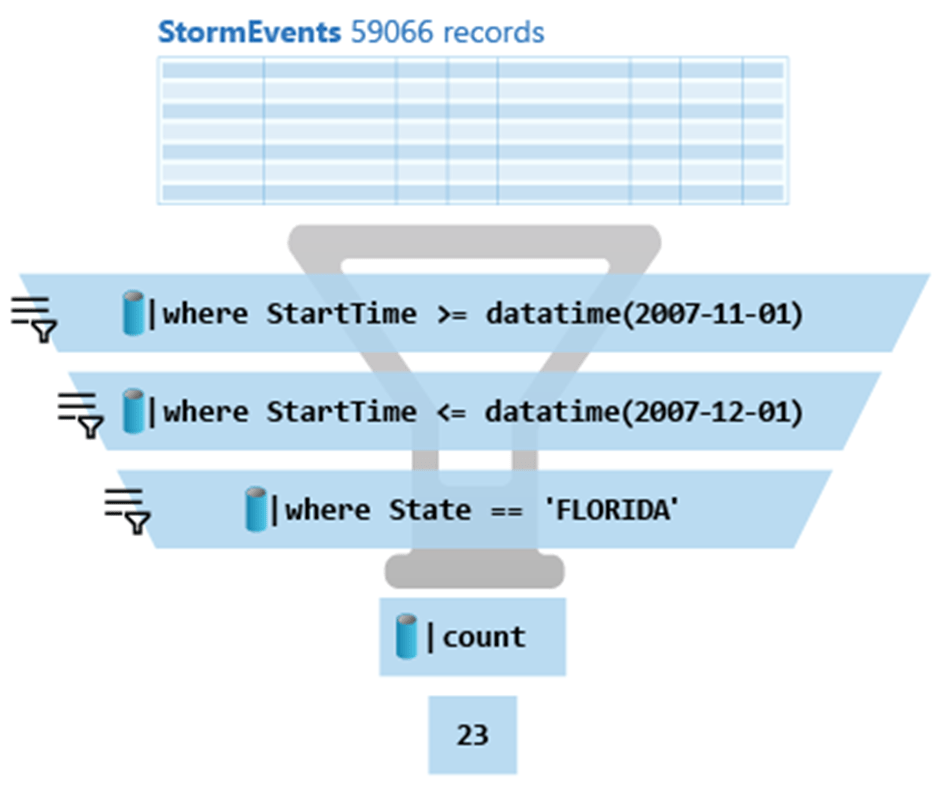

Writing Kusto queries

In Azure Data Explorer, we use the Kusto Query Language (KQL) to write queries. KQL is also used in other Azure services like Azure Monitor Log Analytics, Microsoft Sentinel, and many more.

- A Kusto query is a read-only request to process data and return results.

- It has one or more query statements and returns data in a tabular or graph format.

- Operators within a tabular statement are sequenced by a pipe (|).

- Data flows, or is piped, from one operator to the next.

- The data is filtered/manipulated at each step and then fed into the following step.

- Each time the data passes through another operator, it’s filtered, rearranged, or summarized.

Here is an example query:

StormEvents

| where StartTime >= datetime(2007-11-01)

| where StartTime <= datetime(2007-12-01)

| where State == 'FLORIDA'

| count

The Azure Data Explorer query editor also supports the use of T-SQL in addition to its primary query language, Kusto Query Language (KQL). While KQL is the recommended query language, T-SQL can be useful for tools that are unable to use KQL. For more details, check out how to query data with T-SQL.
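To make the pipe model of the StormEvents query concrete, here is a minimal Python sketch that mimics it. It is only an illustration of how data flows from one operator to the next, not how Kusto is implemented. The sample rows and the helper names `where`, `count`, and `florida_storms_in_november` are hypothetical.

```python
from datetime import datetime

# Hypothetical sample rows standing in for the StormEvents table.
STORM_EVENTS = [
    {"StartTime": datetime(2007, 11, 5), "State": "FLORIDA"},
    {"StartTime": datetime(2007, 11, 20), "State": "TEXAS"},
    {"StartTime": datetime(2007, 12, 15), "State": "FLORIDA"},
    {"StartTime": datetime(2007, 11, 30), "State": "FLORIDA"},
]


def where(rows, predicate):
    """Mimic the KQL `where` operator: keep only rows matching the predicate."""
    return [row for row in rows if predicate(row)]


def count(rows):
    """Mimic the KQL `count` operator.

    KQL returns a one-row table with a Count column; a plain integer is
    returned here for brevity.
    """
    return len(rows)


def florida_storms_in_november(rows):
    # Each step receives the output of the previous step, like a pipe (|).
    step1 = where(rows, lambda r: r["StartTime"] >= datetime(2007, 11, 1))
    step2 = where(step1, lambda r: r["StartTime"] <= datetime(2007, 12, 1))
    step3 = where(step2, lambda r: r["State"] == "FLORIDA")
    return count(step3)


if __name__ == "__main__":
    print(florida_storms_in_november(STORM_EVENTS))
```

Each `where` call narrows the rows before the next step sees them, which is the same filter-then-summarize flow the KQL query expresses with pipes.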

Using commands to manage Azure Data Explorer tables

When it comes to writing commands for managing tables, the first character of the text of a request determines if the request is a management command or a query. Management commands must start with the dot (.) character, and no query may start with that character.

Here are some examples of management commands:

- .create table

- .create-merge table

- .drop table

- .alter table

- .rename column
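The first-character rule is easy to express in code. Here is a small Python sketch of a hypothetical helper, `is_management_command`, that classifies a request as a management command or a query. Ignoring leading whitespace is my assumption here, not something stated above.

```python
def is_management_command(request_text: str) -> bool:
    """Return True when the request starts with a dot (a management command)."""
    # Assumption: leading whitespace is skipped before checking the first character.
    return request_text.lstrip().startswith(".")


if __name__ == "__main__":
    requests = [
        ".create table customers (FullName: string)",
        "customers | count",
        ".show table customers",
    ]
    for text in requests:
        kind = "management command" if is_management_command(text) else "query"
        print(f"{kind}: {text}")
```

A check like this can be handy in tooling that needs to route management commands and queries differently.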

Getting started

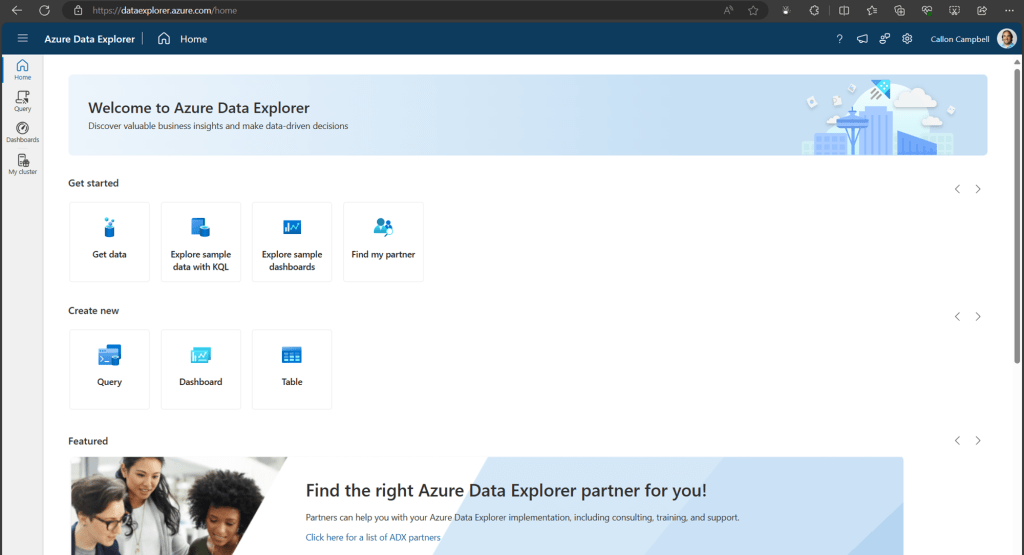

You can try Azure Data Explorer for free using the free cluster. Head over to https://dataexplorer.azure.com/ and log in with any Microsoft Account.

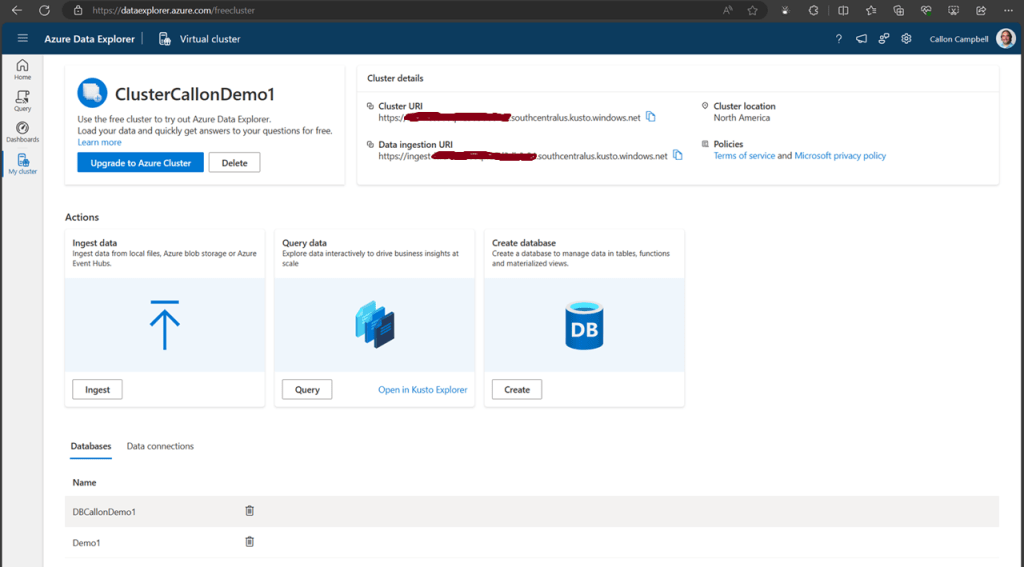

Navigate to the My cluster tab on the left to get access to your cluster URI.

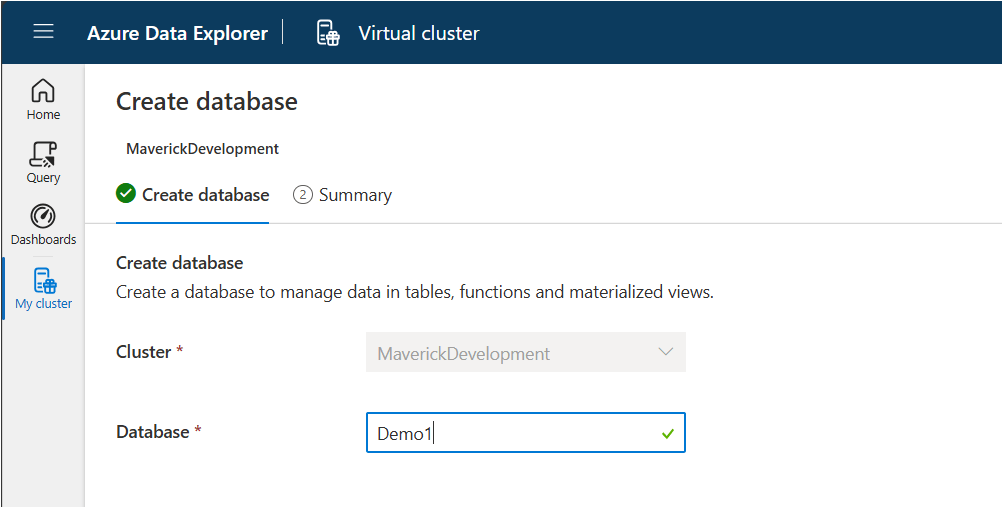

Next, let’s create a new database. While on the My cluster tab, click the Create database button. Give your database a name (in this case, I’m using ‘Demo1’), then click the ‘Create Database’ button.

Now navigate over to the Query tab and let’s create our first table, insert some data, and run some queries.

Creating a table

.create-merge table customers

(

FullName: string,

LastOrderDate: datetime,

YtdSales: decimal,

YtdExpenses: decimal,

City: string,

PostalCode: string

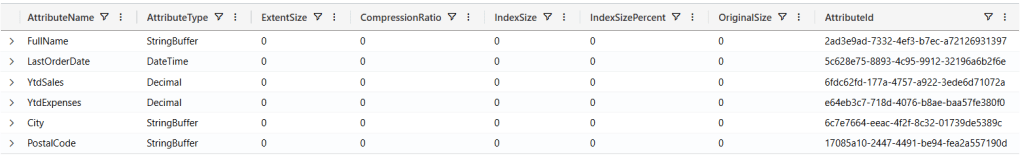

)If we run the .show table customers command, we can see the table definition:

.show table customers

Ingesting data

There are several ways we can ingest data into our table. Here are a few options:

- Ingest from Azure Storage

- Ingest from a Query

- Streaming Ingestion

- Ingest Inline

- Ingest from an application

Today we’re going to be using the inline ingestion, which goes as follows:

.ingest inline into table customers <|

'Bill Gates', datetime(2022-01-10 11:00:00), 1000000, 500000, 'Redmond', '98052'

'Steve Ballmer', datetime(2022-01-06 10:30:00), 150000, 50000, 'Los Angeles', '90305'

'Satya Nadella', datetime(2022-01-09 17:25:00), 100000, 50000, 'Redmond', '98052'

'Steve Jobs', datetime(2022-01-04 13:00:00), 100000, 60000, 'Cupertino', '95014'

'Larry Ellison', datetime(2022-01-04 13:00:00), 90000, 80000, 'Redwood Shores', '94065'

'Jeff Bezos', datetime(2022-01-05 08:00:00), 750000, 650000, 'Seattle', '98109'

'Tim Cook', datetime(2022-01-02 09:00:00), 40000, 10000, 'Cupertino', '95014'

'Steve Wozniak', datetime(2022-01-04 11:30:00), 81000, 55000, 'Cupertino', '95014'

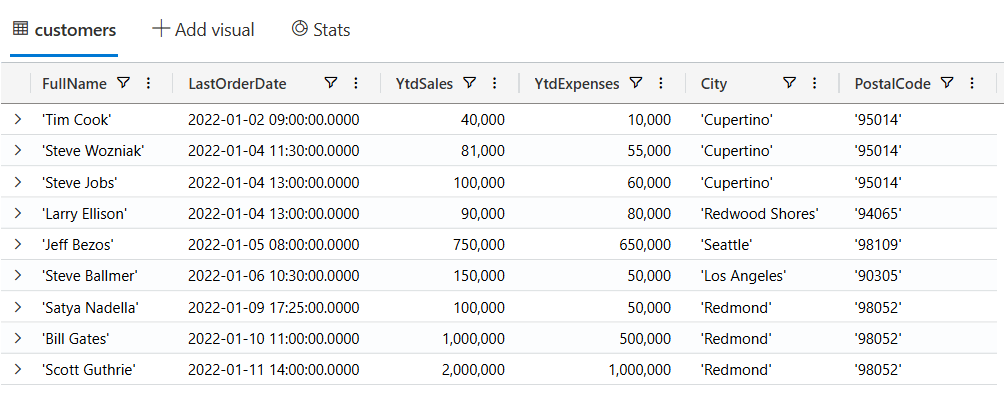

'Scott Guthrie', datetime(2022-01-11 14:00:00), 2000000, 1000000, 'Redmond', '98052'Querying data

Now, let’s start writing KQL queries against our data. In the following query I’m just using the name of the table with no where clause. This is similar to the “SELECT * FROM Customers” in SQL.

customers

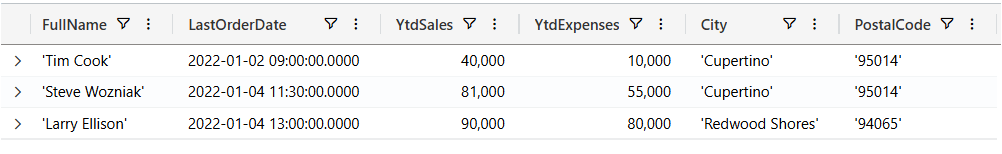

Now let’s filter our data looking for customers where the YtdSales is less than $100,000:

customers

| where YtdSales < 100000

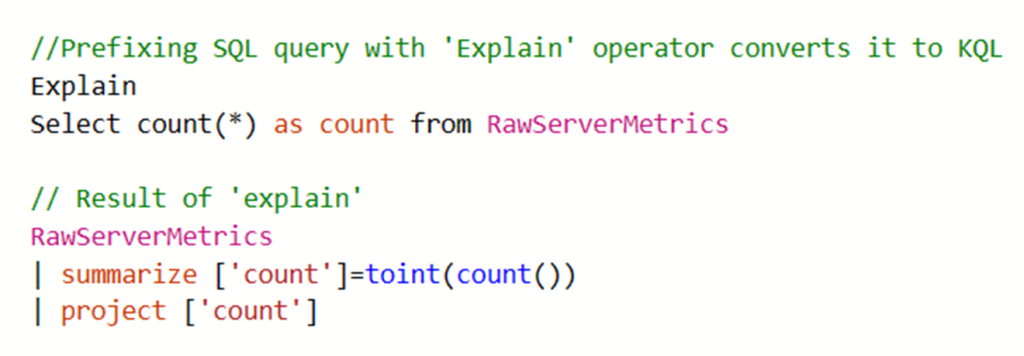
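As a sanity check on what the filter should return, here is a minimal Python sketch that applies the same condition to the rows from the inline ingestion, reduced to the two columns that matter. The names `CUSTOMERS` and `ytd_sales_below` are hypothetical and exist only for this illustration.

```python
from decimal import Decimal

# FullName and YtdSales taken from the inline ingestion data.
CUSTOMERS = [
    ("Bill Gates", Decimal("1000000")),
    ("Steve Ballmer", Decimal("150000")),
    ("Satya Nadella", Decimal("100000")),
    ("Steve Jobs", Decimal("100000")),
    ("Larry Ellison", Decimal("90000")),
    ("Jeff Bezos", Decimal("750000")),
    ("Tim Cook", Decimal("40000")),
    ("Steve Wozniak", Decimal("81000")),
    ("Scott Guthrie", Decimal("2000000")),
]


def ytd_sales_below(rows, limit):
    """Return the names of customers whose YtdSales is strictly below the limit."""
    return [name for name, ytd_sales in rows if ytd_sales < limit]


if __name__ == "__main__":
    # Same condition as: customers | where YtdSales < 100000
    print(ytd_sales_below(CUSTOMERS, Decimal("100000")))
```

Note that the comparison is strict, so customers with exactly 100,000 in sales are excluded, just as they are by the `<` operator in the KQL query.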

SQL to KQL

If you’re unfamiliar with KQL but are familiar with SQL and want to learn KQL, you can translate your SQL queries into KQL by prefacing the SQL query with a comment line, --, and the keyword explain. The output shows the KQL version of the query, which can help you understand the KQL syntax and concepts. Here is an example of the explain keyword in use:

Try the SQL to Kusto Query Language cheat sheet.
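If you want to generate the explain prefix programmatically, for example when converting a batch of SQL queries, a rough Python sketch could look like the following. The helper name `to_explain_request` is hypothetical; it only builds the request text and does not send anything to a cluster.

```python
def to_explain_request(sql_query: str) -> str:
    """Prefix a SQL query with the `--` comment line and the `explain` keyword."""
    return "--\nexplain\n" + sql_query.strip()


if __name__ == "__main__":
    # The resulting text can be pasted into the query editor to see the KQL version.
    print(to_explain_request("SELECT * FROM customers WHERE YtdSales < 100000"))
```

The printed text is the comment line, the explain keyword, and then the SQL query, each on its own line.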

Wrapping up

In this post we looked at what Azure Data Explorer is, when it should be used, and how to use the free personal cluster to create a sample database, ingest data, and then run some queries. I hope this was insightful. In my next post I’ll go deeper on ingesting data in real time, running more complicated queries, and accessing this data from dashboards and APIs.

Enjoy!


GitHub Copilot Chat is now generally available

This week GitHub announced that GitHub Copilot Chat is now generally available. All GitHub Copilot users can now enjoy natural language-powered coding with Copilot Chat at no additional cost for both Visual Studio Code and Visual Studio. It is also free to verified teachers, students, and maintainers of popular open-source projects.

What is GitHub Copilot Chat? GitHub Copilot Chat is a chat interface that lets you interact with GitHub Copilot, an AI-powered coding assistant, within supported IDEs. You can use GitHub Copilot Chat to ask and receive answers to coding-related questions, get code suggestions, explanations, unit tests, and bug fixes, and learn new languages or frameworks.

GitHub Copilot Chat is powered by OpenAI’s GPT-4 model, which is fine-tuned specifically for dev scenarios. You can prompt GitHub Copilot Chat in natural language to get help with learning new languages or frameworks, troubleshooting bugs, writing unit tests, detecting vulnerabilities, and more. GitHub Copilot Chat also supports multiple languages, so you can communicate with it in your preferred language.

To get started with GitHub Copilot Chat, you need to sign up for GitHub Copilot and install the GitHub Copilot extension in your IDE. You can then access GitHub Copilot Chat from the sidebar and start chatting with it. In Visual Studio, go to the View menu and select “GitHub Copilot Chat” to show the pane.

Then ask it a question…

You can also check out the documentation and the FAQ for more information.

Enjoy!

Reference

GitHub Copilot Chat is now generally available for organizations and individuals – The GitHub Blog

Highlights from Microsoft Build 2021 | Digital Event

I’m happy to announce a Highlights from Microsoft Build 2021 digital event next Thursday, July 15. Please join me and other local experts as we look to provide key insights from the event that will help you expand your skillset, find technical solutions, and innovate for the challenges of tomorrow.

Here are the topics that will be covered:

- .NET 6, ASP.NET Core 6, and C# 10

- Internet of Things

- DevOps

- Kubernetes

- Power Platform

- Artificial Intelligence

- Azure Functions

- Entity Framework

- Power BI

For more details about this event, please visit https://www.meetup.com/CTTDNUG/events/279130746/

Enjoy!

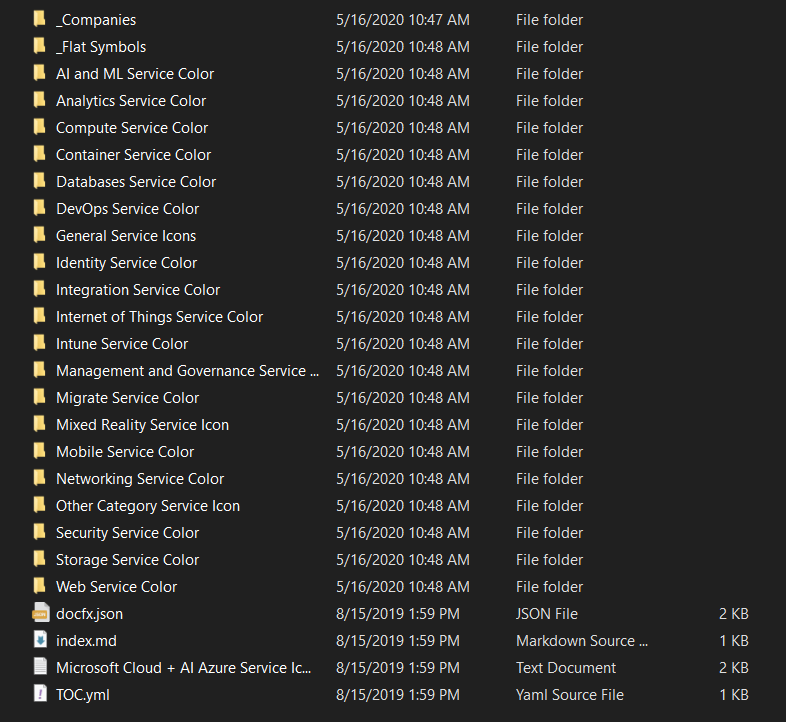

Microsoft Azure Icon/Symbol Resources

Architecture diagrams are a great way to communicate your design, deployment, or topology, and they are just as useful in training decks, documentation, books, and videos.

When it comes to Azure icons there are a few resources available to you, but keep in mind that Microsoft reserves the right to not allow certain uses of these symbols, stop their use, or ask for their removal from use regardless of the source.

Microsoft Azure Cloud and AI Symbol / Icon Set – SVG

This package contains a set of symbols/icons to visually represent resources for Microsoft Azure and related cloud and on-premises technologies. Once downloaded, you can drag and drop the SVG files into PowerPoint or Visio and other tools that accept SVG format, and you don’t need to import anything into Visio.

The latest version at the time of this post is v2019.9.11, updated September 19, 2019, and it now contains only SVG image files.

You can download the resources from here https://www.microsoft.com/en-us/download/details.aspx?id=41937

Azure Icon Pack for draw.io / diagrams.net

If you’re familiar with draw.io (soon to be renamed to diagrams.net), then you’re in luck, as there is an icon pack for Microsoft Azure cloud resources. These are the same images that were originally created by Microsoft.

You can download and access these icons from the GitHub repository which will load them up in draw.io https://github.com/ourchitecture/azure-drawio-icons

Azure.Microsoft.com UX Patterns

This is a comprehensive list of icons that, at the time of this post, is made up of 1,388 icons.

You can access the resources from here https://azure.microsoft.com/en-us/patterns/styles/glyphs-icons/

Amazing Icon Downloader

With the Amazing Icon Downloader extension for Chrome or the new Microsoft Edge (Chromium-based), you can easily find and download SVG icons from the Azure portal.

When you’re in the Azure portal, the extension activates and automatically shows all the icons present in the view you’re in. Some of the icons are named and so are easy to search for, while others follow a naming convention such as FxSymbol0-097.

You can download the Amazing Icon Downloader extension from the Chrome web store https://chrome.google.com/webstore/detail/amazing-icon-downloader/kllljifcjfleikiipbkdcgllbllahaob/

Conclusion

The icons are designed to be simple so that you can easily incorporate them in your diagrams and put them in your whitepapers, presentations, datasheets, posters, or any technical material.

Until recently I mostly used the Microsoft Azure Cloud and AI Symbol / Icon Set. Although it seems to be updated on a yearly basis, I find it still lags in keeping up with the changes happening in Azure. Since I like using the current Azure icons, I prefer using the Amazing Icon Downloader, as I’m usually in the Azure portal working with a particular resource and it’s quick to just grab that icon.

If you know of any other resources please let me know and I’ll update this list.

Enjoy!

Resources

Related post: https://theflyingmaverick.com/2018/01/29/microsoft-azure-symbol-icon-set-download-visio-stencil-png-and-svg/

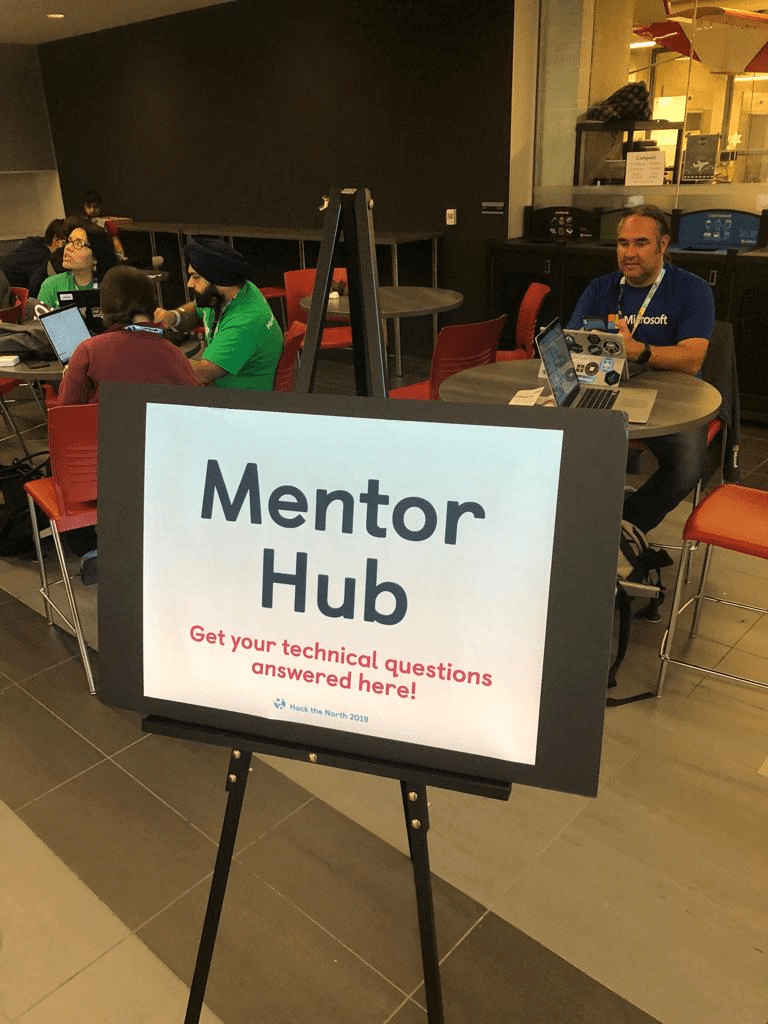

Hack the North Recap (2019)

Last week was Canada’s biggest hackathon, Hack the North, where 1,500 students from all around the world gathered at the University of Waterloo to build something amazing over 36 hours. I had the opportunity to be a mentor and help these smart kids out with their creations.

This is my second hackathon, the first being the UofTHacks VI from earlier in the year. Both are very different from one another but had the same drive and passion from the students and it was amazing to see what they were building.

Here is a gallery of the day.

That’s a wrap. I look forward to my next hackathon in 2020.